Multiple users are reporting SEO issues with the latest update of the popular WordPress caching plugin W3 Total Cache (W3TC), released late last week.

We have tested and confirmed both the presence of the code that blocks Google (and other search engines) and the circumstances in which Google is blocked.

Impact

Users on SEO and WordPress forums are reporting that the new code is causing issues in Google's Mobile-Friendly Test Tool, resulting in submitted URLs failing the test.

Google uses CSS and JS files to render the content found on URLs. Blocking Google from these important site resources could have a negative SEO impact.

We can see here, for example, that without access to these resources Google are unable to confirm whether a page is mobile-friendly.

However, the impact could be more significant than this, potentially leading to crawling and indexing issues for affected sites, which could in turn affect rankings.

What is happening?

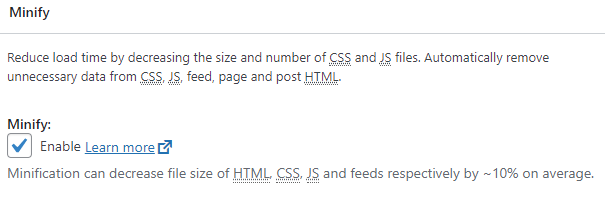

The latest W3TC update adds a new instruction to a site's robots.txt file that blocks all user agents, including Google, from the cache directory. In practice, this blocks Google from the site's CSS and JS files specifically when the CSS/JS minification option is enabled in the plugin settings.

When minification is enabled, site resources are served from the /wp-content/cache/ directory, which the latest update blocks from crawling.
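To make the effect concrete, here is a minimal sketch that uses Python's standard-library `urllib.robotparser` to test two resource URLs against the W3TC rule (`User-agent: *` / `Disallow: /wp-content/cache/`). The domain and both file paths, including the `minify/` subfolder, are hypothetical. Python's parser implements simple prefix matching rather than Google's full robots.txt rules, though for a plain directory rule like this one the result should be the same.

```python
from urllib import robotparser

# The rule block added by W3TC, as quoted in this article
W3TC_ROBOTS = """\
# BEGIN W3TC ROBOTS
User-agent: *
Disallow: /wp-content/cache/
# END W3TC ROBOTS
"""


def googlebot_can_fetch(robots_txt: str, url: str) -> bool:
    """Return True if robots_txt allows Googlebot to crawl url."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# Hypothetical resource URLs: an unminified theme stylesheet and a
# minified copy served from the cache directory
theme_css = "https://example.com/wp-content/themes/example/style.css"
minified_css = "https://example.com/wp-content/cache/minify/abc123.css"

print(googlebot_can_fetch(W3TC_ROBOTS, theme_css))     # True
print(googlebot_can_fetch(W3TC_ROBOTS, minified_css))  # False
```

The rule itself is harmless until minification moves the CSS/JS into the cache directory; only then do the files Google needs for rendering fall under the `Disallow` prefix.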

All In One SEO Plugin Conflict

There are also reports that, on sites that also have the All In One SEO (AIOSEO) plugin installed, the newly created robots.txt file conflicts with the virtual robots.txt file generated by AIOSEO.

The physical file created by W3TC takes precedence over the virtual file originally put in place by All In One SEO.
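Why the physical file wins can be modelled in a few lines. This is a simplified sketch, assuming a typical Apache or Nginx setup for WordPress, where the rewrite rules only hand a request to `index.php` when no matching file exists on disk. WordPress (and therefore AIOSEO) only gets a chance to output a virtual robots.txt when there is no real file. The function name and the sample rule strings are hypothetical.

```python
import tempfile
from pathlib import Path


def serve_robots_txt(document_root: Path, virtual_robots: str) -> str:
    """Simplified model of how a typical WordPress server answers /robots.txt."""
    physical = document_root / "robots.txt"
    if physical.is_file():
        # An existing file is served directly; WordPress never runs.
        return physical.read_text(encoding="utf-8")
    # No file on disk: the request falls through to WordPress, which
    # outputs the virtual robots.txt (e.g. the rules AIOSEO manages).
    return virtual_robots


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    # Hypothetical rules standing in for AIOSEO's virtual robots.txt
    virtual = "User-agent: *\nDisallow: /wp-admin/\n"
    print(serve_robots_txt(root, virtual) == virtual)  # True: no file on disk yet
    # W3TC writes a physical robots.txt into the document root
    (root / "robots.txt").write_text(
        "User-agent: *\nDisallow: /wp-content/cache/\n", encoding="utf-8"
    )
    print(serve_robots_txt(root, virtual) == virtual)  # False: the physical file wins
```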

Robots.txt

You can see the new instruction set below (enclosed between two comments):

```text
# BEGIN W3TC ROBOTS
User-agent: *
Disallow: /wp-content/cache/
# END W3TC ROBOTS
```

We confirmed the presence of this code in the latest plugin update via the W3TC GitHub repository and live on a test site.

Although the robots.txt instruction appeared straight away, files were not moved into the /cache/ directory until we turned on the minification option.

Solution

Disabling minification in the plugin options (and flushing all caches) should move the site JS/CSS files back out of the cache directory.

If you don't want to turn off minification, an alternative temporary fix is simply to remove or comment out these lines in your site's robots.txt file, though the issue could recur depending on how W3TC handle this.
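If you manage several sites, commenting the block out can be scripted. The sketch below assumes the `# BEGIN W3TC ROBOTS` / `# END W3TC ROBOTS` markers match the ones quoted in this article and prefixes every rule line between them with `# `, leaving the rest of the file untouched. `patch_robots_file` is a hypothetical helper; back up the file first, and bear in mind that W3TC may write the block again (for example when its settings are saved).

```python
from pathlib import Path

BEGIN = "# BEGIN W3TC ROBOTS"
END = "# END W3TC ROBOTS"


def comment_out_w3tc_block(robots_txt: str) -> str:
    """Comment out every rule line between the W3TC markers."""
    out = []
    inside = False
    for line in robots_txt.splitlines():
        stripped = line.strip()
        if stripped == BEGIN:
            inside = True
        elif stripped == END:
            inside = False
        elif inside and stripped and not stripped.startswith("#"):
            line = "# " + line
        out.append(line)
    return "\n".join(out) + "\n"


def patch_robots_file(path: Path) -> None:
    """Hypothetical helper: rewrite a robots.txt file in place."""
    text = path.read_text(encoding="utf-8")
    path.write_text(comment_out_w3tc_block(text), encoding="utf-8")


# Example: a robots.txt with an existing rule plus the W3TC block
original = """\
User-agent: *
Disallow: /wp-admin/
# BEGIN W3TC ROBOTS
User-agent: *
Disallow: /wp-content/cache/
# END W3TC ROBOTS
"""
print(comment_out_w3tc_block(original))
```

Commenting out rather than deleting keeps the markers in place, which makes it easy to see later whether the plugin has restored the rule.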

Hopefully, once W3TC realise their error (prompted, I expect, by a lot of negative feedback from the SEO/webmaster community), they will remove this from future versions of the plugin.

For users who are also seeing a conflict with the All In One SEO plugin, the best fix would probably be to downgrade to the previous version of W3 Total Cache and turn off automatic updates until the issue is resolved.

Update/Patch

W3TC released an updated version of the plugin (2.1.8) to fix the issue.

If you update your plugin (or have automatic plugin updates enabled) the problem should be resolved.

To check, simply visit your site's robots.txt file and confirm that the Disallow rule is no longer in place.
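Rather than checking by eye, the following sketch fetches a site's live robots.txt and asks `urllib.robotparser` whether Googlebot may still crawl a path under /wp-content/cache/. The probe filename and the `example.com` domain are placeholders, and the standard-library parser only approximates Google's own matching rules, so treat the result as a quick sanity check.

```python
import urllib.request
from urllib import robotparser


def cache_dir_blocked(robots_txt: str, site: str, agent: str = "Googlebot") -> bool:
    """Return True if robots_txt still stops `agent` crawling /wp-content/cache/."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # Any path under the cache directory works as a probe; this one is made up.
    probe = site.rstrip("/") + "/wp-content/cache/minify/probe.css"
    return not parser.can_fetch(agent, probe)


def check_site(site: str) -> bool:
    """Fetch the site's live robots.txt and report whether the cache rule remains."""
    url = site.rstrip("/") + "/robots.txt"
    with urllib.request.urlopen(url, timeout=10) as resp:
        text = resp.read().decode("utf-8", errors="replace")
    return cache_dir_blocked(text, site)


# Usage (needs network access; replace the placeholder domain with your own):
# print(check_site("https://example.com"))
```

A result of `False` means the cache directory is crawlable again, which is what you should see after updating to 2.1.8.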